AWS S3 Static Website Frustrations

Hosting a static website on AWS S3 is awesome... but pushing updates was getting frustrating...

When I decided to put up this web site and the www.jakhak.com web app, I decided to make them serverless for several reasons:

- It’s the new thing (well fairly new), and I wanted to see how it works…

- I wanted to get experience with the serverless way of doing things… for example using Lambda to create micro-services, and…

- Well… no server to maintain! Awesome.

Incidentally, jakhak.com uses VueJS and micro-services implemented with AWS Lambda. However, for this site I wanted a blogging framework. WordPress won’t work this way… at least not out of the box. It needs to run on a web server. So in this case I’ve used Hugo and one of the standard themes. I’m getting used to it and it’s working fine for what I’m doing here.

But alas I digress…

Anyway, building the static, serverless websites in general has gone great.

But when I made changes, I had trouble with the way AWS caches content… i.e., getting my updates to actually show up in the live version of the website. I’d jump through hoops: deleting the changed file from S3, waiting a few minutes, uploading the updated file, and then going to the CloudFront Management Console and “invalidating” the file so that CloudFront would re-cache it. Then I’d clear the cache in my browser, reload the website, and hope to see the new content.
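The invalidation step doesn’t have to go through the console. Here is a minimal sketch using the AWS CLI’s `create-invalidation` command. The distribution ID `EXAMPLEDISTID`, the `invalidate_paths` helper, and the `DRY_RUN` switch are all placeholders made up for illustration; by default the helper only prints the command so you can check it before running it for real.

```shell
# Hypothetical placeholder: replace with your own CloudFront distribution ID.
DISTRIBUTION_ID="${DISTRIBUTION_ID:-EXAMPLEDISTID}"

# Invalidate one or more paths. With DRY_RUN=1 (the default in this sketch)
# the command is only printed, not executed.
invalidate_paths() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "aws cloudfront create-invalidation --distribution-id $DISTRIBUTION_ID --paths $*"
  else
    aws cloudfront create-invalidation --distribution-id "$DISTRIBUTION_ID" --paths "$@"
  fi
}

# Examples (uncomment to use; quote wildcards so the shell leaves them alone):
# DRY_RUN=0 invalidate_paths "/index.html"
# DRY_RUN=0 invalidate_paths "/*"
```

Invalidating `/*` is the blunt option; listing individual paths is closer to what I was doing by hand in the console.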

After all these steps, it still seemed like the updates would occur intermittently. Sometimes I’d still see the old content… and I wasn’t quite sure where that content was still being cached.

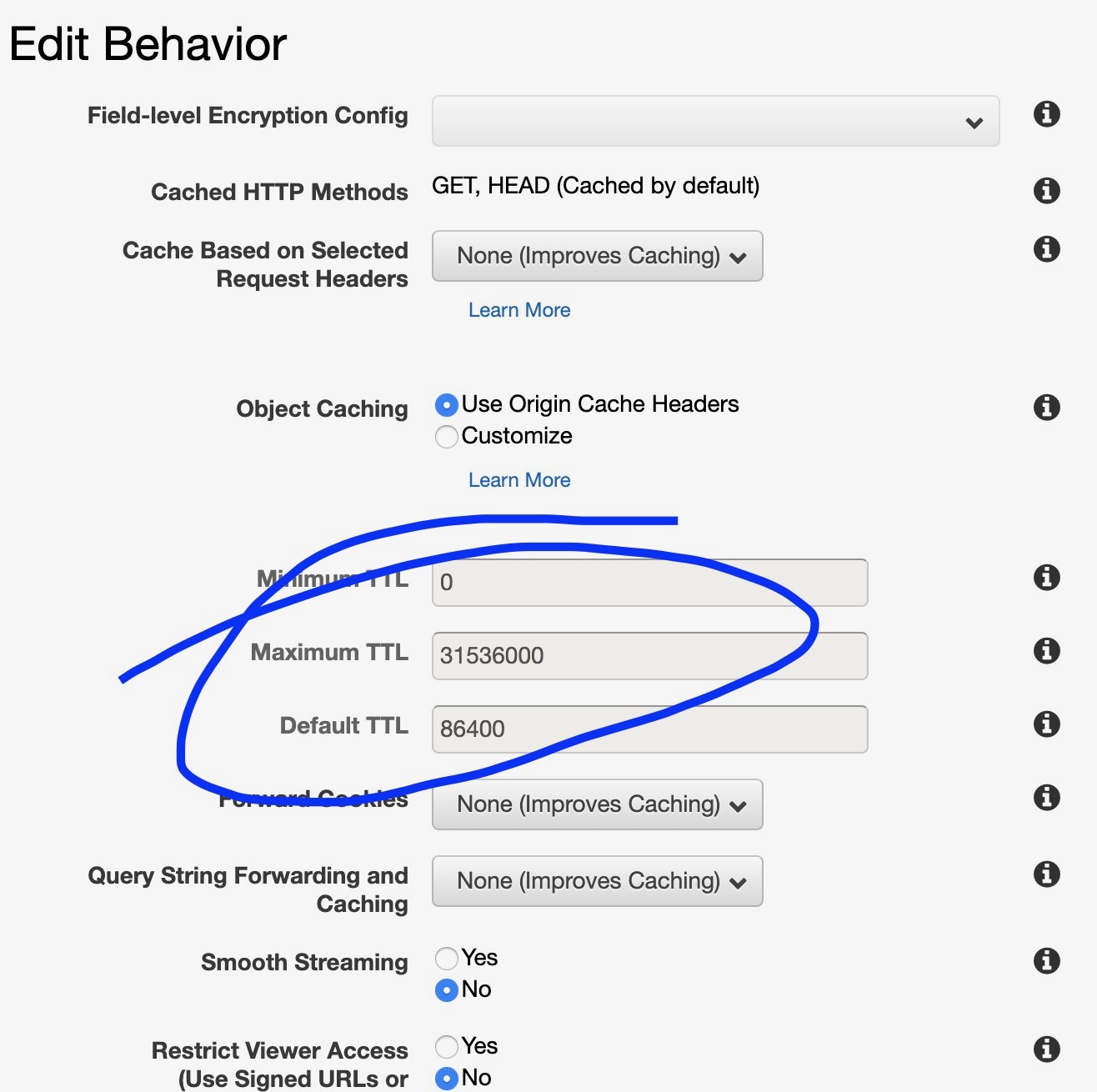

When setting up the CloudFront Distribution, there seemed to be a field where the maximum caching time could be altered. But the process wouldn’t let me actually alter those values. And the values were big… 31536000 seconds… which means CloudFront could hold on to the cached content for up to a year!
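To sanity-check that number, here is the arithmetic as a tiny shell helper (the `seconds_to_days` name is just for illustration):

```shell
# Convert a TTL in seconds to whole days (86400 seconds per day).
seconds_to_days() {
  echo $(( $1 / 86400 ))
}

seconds_to_days 31536000   # prints 365
```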

Even now, if I open “Edit Behavior” in the CloudFront Console (click on the Distribution, then click the “Behaviors” tab, then select the record and click “Edit”), I can still see that default maximum of up to a year.

This was puzzling and frustrating, and at first it didn’t seem like Google had any answers.

However, thanks to a couple of StackOverflow posts, I think I’ve finally discovered a solution that works, at least in my case.

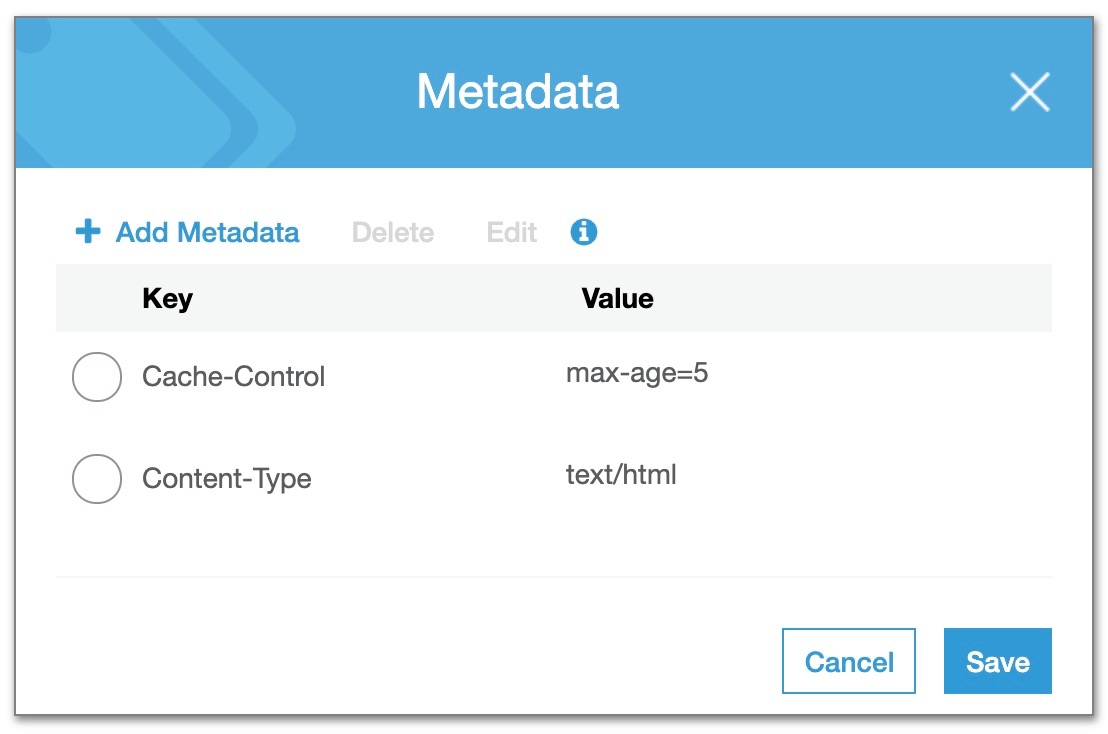

It’s possible to set the caching limit not in CloudFront, but in S3 itself, as Cache-Control metadata on each object. You can see it/set it in the S3 Console by navigating to a specific object (like an HTML page or an image), clicking on the “Properties” tab for that object, and then selecting the “Metadata” card.

However, it’s not convenient to set the caching limit for a lot of objects in S3 this way. Luckily there is a way to do it in the AWS CLI with the following command:

aws s3 sync /path s3://yourbucket/ --cache-control max-age=5

This will set a Cache-Control value of max-age=5 on the files that sync uploads, i.e. a caching limit of 5 seconds, so that the live website stays up to date. Now when I refresh the page in my browser, it immediately shows the updated content that I just pushed to the website!
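If you want to see what is actually being served instead of trusting the browser, one option is to look at the response headers with curl. This is a rough sketch: the `cache_headers` helper and the example URL are made up for illustration. It keeps only the `Cache-Control`, `Age`, and `X-Cache` headers, the last of which CloudFront uses to report a cache hit or miss.

```shell
# Keep only the caching-related headers from an HTTP response read on stdin.
cache_headers() {
  # Strip carriage returns first, since HTTP header lines end in \r\n.
  tr -d '\r' | grep -i -E '^(cache-control|age|x-cache):'
}

# Example (hypothetical URL; uncomment to use):
# curl -sI https://www.example.com/index.html | cache_headers
```

If `Cache-Control` shows the max-age you just set, the metadata made it onto the object.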

Of course with the sync command, you’ll need to have the objects in your local folder that you’re syncing. Not to fear, though… there’s a solution to do it without syncing as well. For more information, check out these StackOverflow posts:

How to Add Cache Control in AWS S3

Set Cache Control for Entire S3 Bucket Automatically

One note about this method… this will defeat the benefit of CloudFront’s CDN. If you’re concerned about this, however, you can always set the caching limit back to something higher when the content is stable. My web sites are not complex, so this works fine for me for now. Maybe it will suit your needs as well!
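For reference, the no-sync approach mentioned above usually comes down to copying the bucket onto itself with replaced metadata, and the same command can raise the limit again later. A minimal sketch, assuming a bucket called `yourbucket`; the `set_cache_control` helper and the `DRY_RUN` switch are made up for illustration, and by default the command is only printed.

```shell
# Hypothetical placeholder: replace with your own bucket name.
BUCKET="${BUCKET:-yourbucket}"

# Rewrite Cache-Control on every object already in the bucket by copying
# each object onto itself with replaced metadata. $1 is max-age in seconds.
# With DRY_RUN=1 (the default in this sketch) the command is only printed.
set_cache_control() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "aws s3 cp s3://$BUCKET/ s3://$BUCKET/ --recursive --metadata-directive REPLACE --cache-control max-age=$1"
  else
    aws s3 cp "s3://$BUCKET/" "s3://$BUCKET/" --recursive \
      --metadata-directive REPLACE --cache-control "max-age=$1"
  fi
}

# Examples (uncomment to use):
# DRY_RUN=0 set_cache_control 5       # while the site is changing a lot
# DRY_RUN=0 set_cache_control 86400   # back to one day once it is stable
```

Because `REPLACE` rewrites each object’s metadata, it’s worth spot-checking afterwards that things like Content-Type still look right on a few objects.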